Updated documentation

Showing 199 additions and 54 deletions (+199 -54)

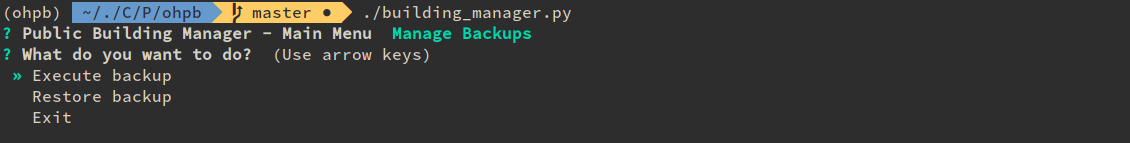

docs/images/backup_menu.png (new file, mode 100644, 23.9 KB)

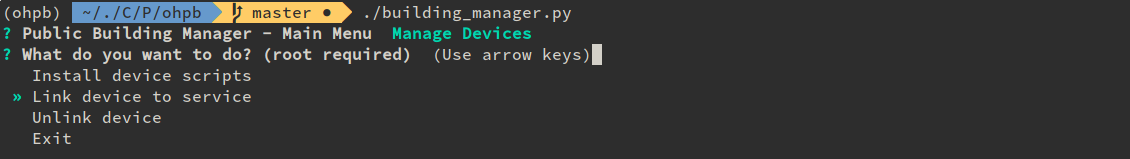

docs/images/device_menu.png (new file, mode 100644, 28.9 KB)

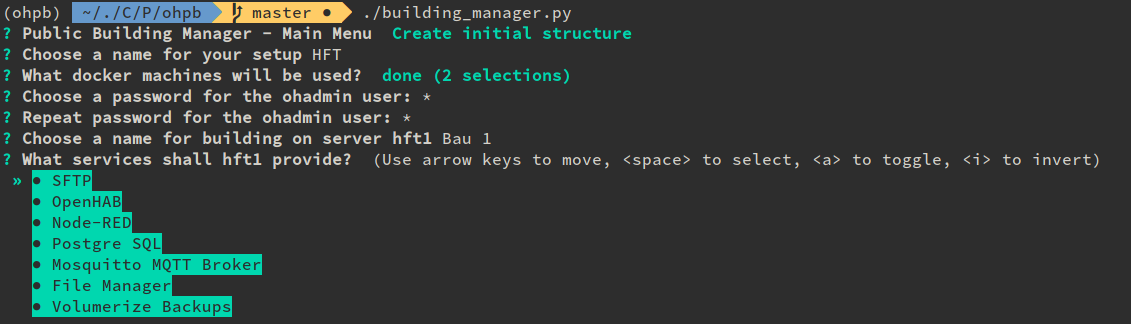

docs/images/init_menu.png (new file, mode 100644, 60.1 KB)

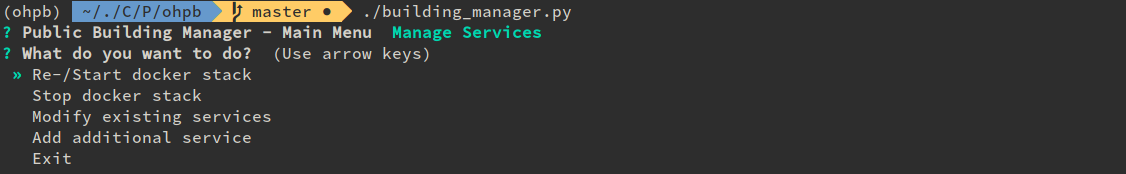

docs/images/service_menu.png (new file, mode 100644, 30.3 KB)

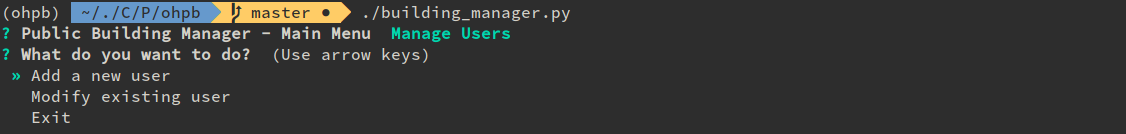

docs/images/user_menu.png (new file, mode 100644, 23.5 KB)